Hongyu Liu

PhD Candidate

Taken at a real-life location from Steins;Gate (my favorite anime).

I am a third-year (final-year) Ph.D. student in the Department of Computer Science and Engineering at The Hong Kong University of Science and Technology (HKUST), where I am fortunate to be advised by Prof. Qifeng Chen. My research spans computer vision and graphics, including 2D/3D content generation, digital humans, neural rendering, and video generation. I am particularly interested in building intelligent systems that can understand and synthesize realistic visual content of humans and avatars, bridging the gap between the physical and digital worlds.

Beyond research, I’m deeply passionate about fishing—not just as a hobby, but as a personal dream. I aspire to one day travel across China to fish in its diverse rivers, lakes, and coastal waters, using the journey as a way to connect with nature, explore local cultures, and find inspiration outside the lab.

News

| Sep 28, 2025 | HeadArtist-VL is accepted to TPAMI 2025. |

|---|---|

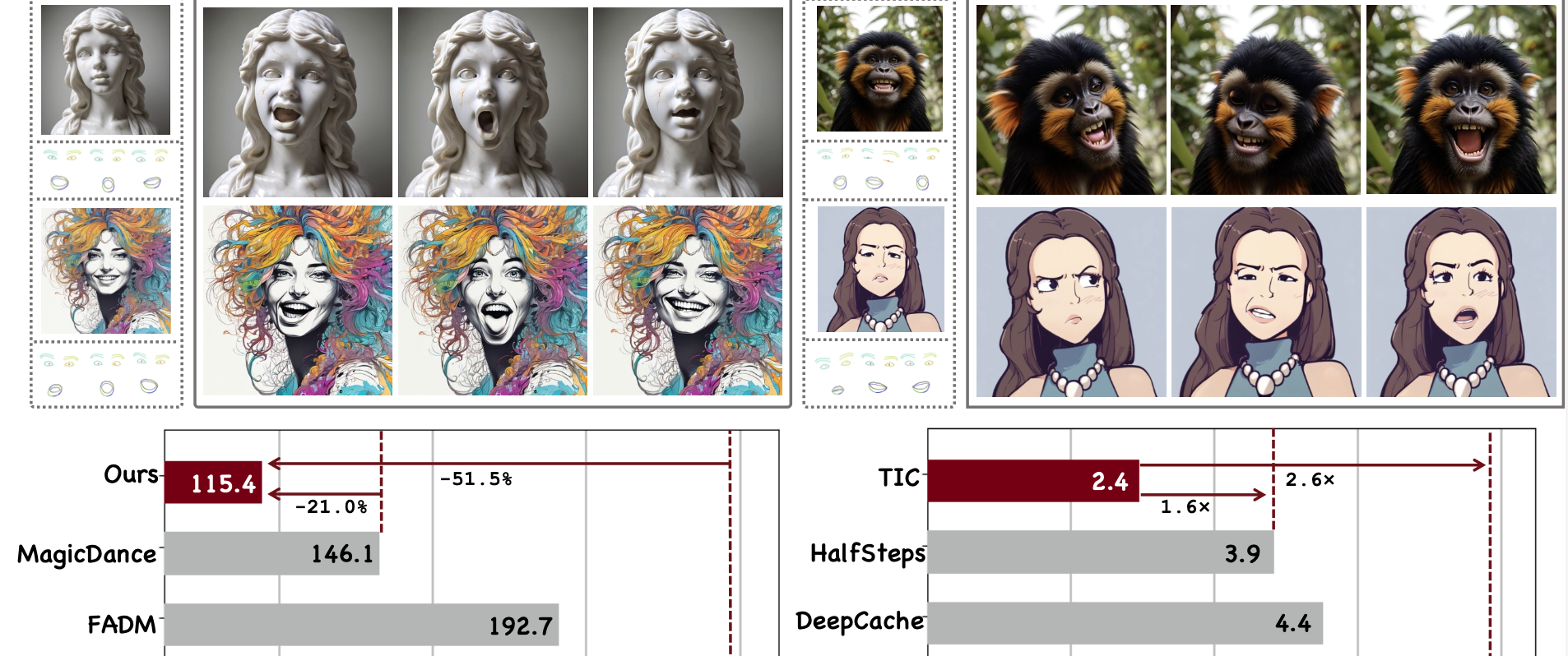

| Aug 20, 2025 | Follow-Your-Emoji-Faster is accepted to IJCV 2025. |

| Feb 26, 2025 | AvatarArtist is accepted to CVPR 2025. |

| Jul 01, 2024 | Follow-Your-Emoji paper is accepted to SIGGRAPH Asia 2024. |

| May 01, 2024 | We released a survey paper:LLMs Meet Multimodal Generation and Editing: A Survey. |

Three Most Recent Publications

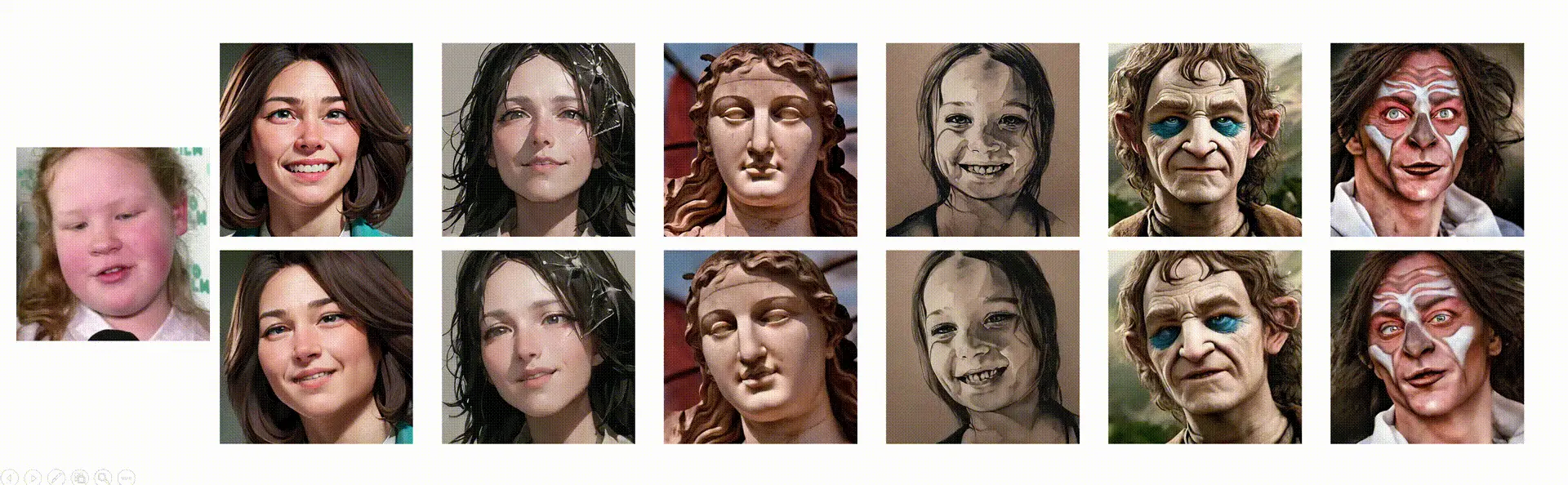

- HeadArtist-VL: Vision / Language Guided 3D Head Generation with Self Score Distillation. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2025.